In many organizations, scanned documents still play a central role in day-to-day operations—think of patient intake forms, field surveys, or inspection checklists. While digitization has become the norm, extracting actionable information from these scanned forms is still frustratingly manual.

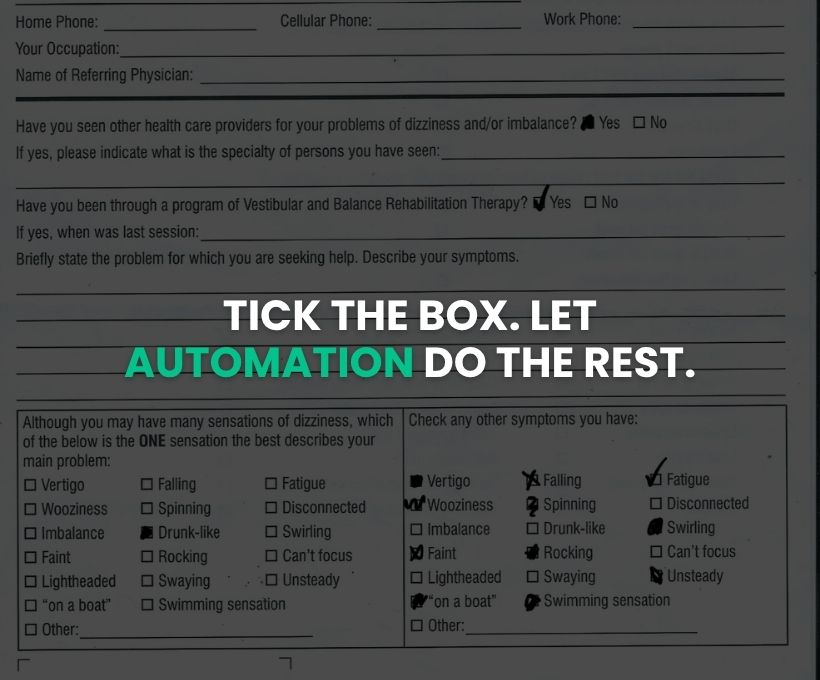

I recently completed a project that tackled this very challenge: extracting specific answers marked by tick marks from scanned forms. It’s a small visual cue, often overlooked by machines, but critically important to human decision-making. My job was to make it machine-readable—and more importantly, actionable at scale.

📌 The Business Pain Point

A client managing thousands of scanned documents needed to extract specific responses—those indicated by checkmarks or tick marks. Whether it was patient forms in a hospital or customer feedback in a service-based company, teams were spending hours combing through files, identifying responses manually.

The volume wasn’t the only issue—inconsistency in forms, tick mark shapes, and the way answers were placed made it nearly impossible to standardize the process. Human reviewers often missed answers or had to go line-by-line, increasing both the workload and the potential for error.

This wasn’t just inefficient. It was costly. And it was unsustainable as document volume grew.

💡 The Thinking Behind the Automation

Instead of trying to interpret entire forms or train a complex document AI model, I focused on the actual visual signal—the tick mark.

I built an automation pipeline using image processing and OCR that did one specific thing really well:

It detects tick marks and pulls the text next to them.

Using OpenCV for template matching, the system identifies tick marks—even if their shapes vary slightly. Then, with pytesseract (OCR), it reads the text in close proximity to each detected mark. I also made the tool robust by supporting both single-template and multi-template detection modes, giving flexibility for different form types.

The result? A simple script that turns a cluttered scanned document into structured, usable data in just seconds.

⏱️ What Changed for the Client

Before the automation:

- Teams spent hours daily reviewing printed forms.

- Responses were sometimes missed due to fatigue or oversight.

- The process couldn’t scale without hiring more data entry staff.

After the automation:

- The same volume of forms is processed in minutes, not hours.

- Accuracy improved because visual detection doesn’t tire.

- The client can scale their operations without increasing headcount.

In one specific case, what previously required 4–6 hours of manual review now takes under 10 minutes—with higher accuracy and no fatigue.

🌍 Why This Matters

Tick marks may seem minor, but they represent decisions—Yes or No, Selected or Skipped. In industries like healthcare, education, or public service, getting those answers fast can drive better outcomes.

This kind of automation isn’t just a time-saver. It’s a force multiplier. It gives teams more breathing room to focus on work that needs judgment, not just eyeballs. And most importantly, it’s adaptable—this solution can plug into other use cases like checklists, ballots, or any scanned form-based process.

🤝 Interested in Exploring Automation?

I built this project for a client, but its impact reaches far beyond one organization. If your team is still reviewing scanned forms manually—or missing important data hidden in plain sight—automation might be the edge you’re looking for.

Want to explore how a similar solution could work for your workflow?

📩 Feel free to reach out or start a conversation—I’m always open to new ideas and collaborations.