Table of Contents

- Introduction

- Key Highlights of the Lecture

- Application to My Multi-Category Reviews Classifier Project

- Takeaways and Next Steps

- Invitation to Collaborate

Introduction

Lecture 22 of the Innoquest Cohort-1 AI/ML training by Innovista was a deep dive into the world of Large Language Models (LLMs). The session illuminated their significance, mechanisms, and applications, while laying a strong foundation for practical fine-tuning tasks. This post shares my learnings and reflections from the lecture, highlighting insights that are enhancing my ongoing projects.

Key Highlights of the Lecture

What Are LLMs and Why We Need Them

The lecture began with an overview of Large Language Models, explaining their role in solving complex tasks and their evolution from traditional Small Language Models (SLMs). The necessity of LLMs was framed within the context of Moore’s Law, emphasizing how advancements in model size, compute, and data lead to remarkable performance gains.

The “Bigger Is Better” Paradigm

A pivotal discussion revolved around a landmark paper by OpenAI, which demonstrated a linear improvement in model accuracy with increasing size and compute. This “Bigger Is Better” principle underpins the development of state-of-the-art models, underscoring performance scalability with resources.

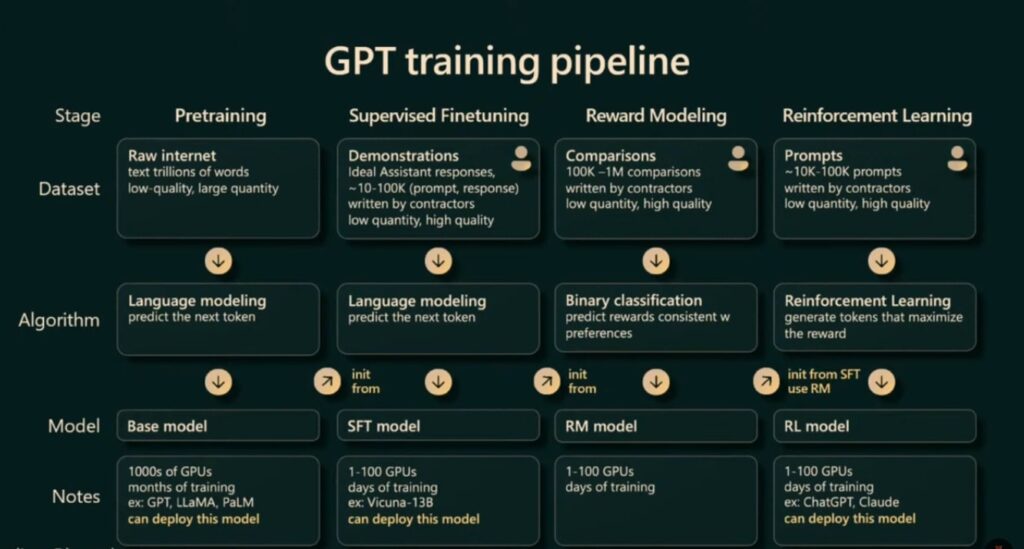

Pipeline of Building and Fine-Tuning LLMs

The detailed pipeline of LLM development was a highlight:

- Base Model Development: Focused on next-word prediction tasks.

- Soft Language Models (SFLs): Fine-tuned for querying and multi-task conversational capabilities.

- Reinforcement Learning: Used to further refine the model, mitigating biases and improving response rationality.

Model Fine-Tuning

Tailoring base models to specific use cases is a crucial step that aligns model responses with desired behavior without adding new knowledge. This ensures data security and allows us to adapt models to solve unique problems effectively.

Reinforcement Learning in LLM Refinement

The lecture emphasized the value of reinforcement learning in addressing irrational outputs and biases, making LLMs more robust and trustworthy for real-world applications. This step bridges the gap between fine-tuning and deployable solutions.

Application to My Multi-Category Reviews Classifier Project

The lecture’s practical component focused on fine-tuning Llama-7B using the Troll dataset. While the teacher provided a training notebook, the assignment required us to:

- Train the LLM with the dataset.

- Use the fine-tuned checkpoint for inference.

Having previously fine-tuned a transformer, I am confident in applying these techniques to complete the task. This exercise directly feeds into my multi-category reviews classifier project, equipping me to design an optimized, task-specific solution.

Takeaways and Next Steps

Key Learnings

- The scalability of LLMs directly correlates with performance improvements.

- Fine-tuning enhances usability without compromising data security.

- Leveraging the art of Fine-tuning – A highly valuable thing in solving real-world use cases.

- Reinforcement learning ensures bias mitigation and response rationality.

Next Steps

- Complete the fine-tuning assignment.

- Integrate insights from the lecture into my reviews classifier project.

- Explore advanced use cases of fine-tuned LLMs.

Invitation to Collaborate

I believe in the power of shared knowledge and collaborative innovation. If this post resonates with your goals or inspires ideas for mutual growth, feel free to connect. Let’s explore opportunities to create impactful solutions together.